介绍

手册:https://docs.ceph.com/en/latest/

ceph可以实现的存储方式:

块存储:提供像普通硬盘一样的存储,为使用者提供“硬盘”

文件系统存储:类似于NFS的共享方式,为使用者提供共享文件夹

对象存储:像百度云盘一样,需要使用单独的客户端

ceph还是一个分布式的存储系统,非常灵活。如果需要扩容,只要向ceph集中增加服务器即可。ceph存储数据时采用多副本的方式进行存储,生产环境下,一个文件至少要存3份。ceph默认也是三副本存储。

ceph的构成

Ceph OSD 守护进程: Ceph OSD 用于存储数据。此外,Ceph OSD 利用 Ceph 节点的 CPU、内存和网络来执行数据复制、纠删代码、重新平衡、恢复、监控和报告功能。存储节点有几块硬盘用于存储,该节点就会有几个osd进程。

Ceph Mon监控器: Ceph Mon维护 Ceph 存储集群映射的主副本和 Ceph 存储群集的当前状态。监控器需要高度一致性,确保对Ceph 存储集群状态达成一致。维护着展示集群状态的各种图表,包括监视器图、 OSD 图、归置组( PG )图、和 CRUSH 图。

MDSs: Ceph 元数据服务器( MDS )为 Ceph 文件系统存储元数据。

RGW:对象存储网关。主要为访问ceph的软件提供API接口。

ceph集群安装

1、环境:

操作系统: Rocky8.4

2、ceph版本

15.2.17 octopus (stable)

3、初始化工作(三台机器同时操作):

3.1、关闭防火墙:

systemctl stop firewalld && systemctl disable firewalld3.1关闭防火墙:

setenforce 0 && sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

[root@ceph-admin ~]# cat /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of these three values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted3.3 设置时间同步(很重要,不成功会影响存储创建):

[root@ceph-admin ~]# dnf install chrony

[root@ceph-admin ~]# cat /etc/chrony.conf

# 使用公共 NTP 服务器

server 0.pool.ntp.org iburst

server 1.pool.ntp.org iburst

server 2.pool.ntp.org iburst

server 3.pool.ntp.org iburst

# 允许本地网络内的客户端同步时间

allow 192.168.50.0/24

# 其他配置项

driftfile /var/lib/chrony/drift

makestep 1.0 3

rtcsync

keyfile /etc/chrony.keys

leapsectz right/UTC

logdir /var/log/chrony

[root@ceph-node01 ~]# cat /etc/chrony.conf

# 使用公共 NTP 服务器

server 192.168.50.66 iburst

# 允许本地网络内的客户端同步时间

allow 192.168.50.0/24

# 其他配置项

driftfile /var/lib/chrony/drift

makestep 1.0 3

rtcsync

keyfile /etc/chrony.keys

leapsectz right/UTC

logdir /var/log/chrony

[root@ceph-node02 ~]# cat /etc/chrony.conf

# 使用公共 NTP 服务器

server 192.168.50.66 iburst

# 允许本地网络内的客户端同步时间

allow 192.168.50.0/24

# 其他配置项

driftfile /var/lib/chrony/drift

makestep 1.0 3

rtcsync

keyfile /etc/chrony.keys

leapsectz right/UTC

logdir /var/log/chrony

#重启时间同步服务

systemctl restart chronyd

systemctl enable chronyd

验证:

[root@ceph-node01 ~]# chronyc sources -v

.-- Source mode '^' = server, '=' = peer, '#' = local clock.

/ .- Source state '*' = current best, '+' = combined, '-' = not combined,

| / 'x' = may be in error, '~' = too variable, '?' = unusable.

|| .- xxxx [ yyyy ] +/- zzzz

|| Reachability register (octal) -. | xxxx = adjusted offset,

|| Log2(Polling interval) --. | | yyyy = measured offset,

|| \ | | zzzz = estimated error.

|| | | \

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* ceph-admin 3 6 17 47 -26ms[ -26ms] +/- 116ms

[root@ceph-node02 ~]# chronyc sources -v

.-- Source mode '^' = server, '=' = peer, '#' = local clock.

/ .- Source state '*' = current best, '+' = combined, '-' = not combined,

| / 'x' = may be in error, '~' = too variable, '?' = unusable.

|| .- xxxx [ yyyy ] +/- zzzz

|| Reachability register (octal) -. | xxxx = adjusted offset,

|| Log2(Polling interval) --. | | yyyy = measured offset,

|| \ | | zzzz = estimated error.

|| | | \

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* ceph-admin 3 6 17 36 -2690us[-1076us] +/- 100ms

3.4 增加ceph安装所需要的yum源

ceph源

[root@ceph-admin ~]# cat /etc/yum.repos.d/ceph.repo

[ceph]

name=Ceph packages for $basearch

baseurl=https://mirrors.tuna.tsinghua.edu.cn/ceph/rpm-15.2.17/el8/x86_64

enabled=1

gpgcheck=0

[ceph-noarch]

name=Ceph noarch packages

baseurl=https://mirrors.tuna.tsinghua.edu.cn/ceph/rpm-15.2.17/el8/noarch/

enabled=1

gpgcheck=0

[ceph-source]

name=Ceph source packages

baseurl=https://mirrors.tuna.tsinghua.edu.cn/ceph/rpm-15.2.17/el8/SRPMS

enabled=1

gpgcheck=0

epel源(不装的话会导致安装不了ceph)

dnf install epel-release -y3.5 设置主机名:

hostnamectl set-hostname ceph-admin

hostnamectl set-hostname ceph-node01

hostnamectl set-hostname ceph-node023.6 修改域名解析文件:

[root@ceph-admin ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.50.66 ceph-admin s3.myorg

192.168.50.67 ceph-node01

192.168.50.68 ceph-node023.7 安装ceph所需要的依赖

yum install -y python3 ceph-common device-mapper-persistent-data lvm2 3.8安装docker(三台机器同时操作):(Rokcy8中默认有podman)

yum install -y yum-utils

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install docker-ce-3:19.03.15 docker-ce-cli-1:19.03.15-3.el8 containerd.io -y

mkdir -p /etc/docker

tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://fxt824bw.mirror.aliyuncs.com"]

}

EOF

#验证

[root@ceph-admin ~]# docker -v

Docker version 19.03.15, build 99e3ed8919

#启动docker

systemctl start docker && systemctl enable docker

4、安装cephadm(ceph-admin节点操作)部署集群:

4.1安装cephadm:

yum install cephadm -y[root@ceph-admin ~]# cephadm bootstrap --mon-ip 192.168.50.66

Verifying podman|docker is present...

Verifying lvm2 is present...

Verifying time synchronization is in place...

Unit chronyd.service is enabled and running

Repeating the final host check...

podman|docker (/usr/bin/docker) is present

systemctl is present

lvcreate is present

Unit chronyd.service is enabled and running

Host looks OK

Cluster fsid: d33d7a0c-9a5a-11ef-a4d0-000c2910f6bd

Verifying IP 192.168.50.66 port 3300 ...

Verifying IP 192.168.50.66 port 6789 ...

Mon IP 192.168.50.66 is in CIDR network 192.168.50.0/24

Pulling container image quay.io/ceph/ceph:v15...

Extracting ceph user uid/gid from container image...

Creating initial keys...

Creating initial monmap...

Creating mon...

Waiting for mon to start...

Waiting for mon...

mon is available

Assimilating anything we can from ceph.conf...

Generating new minimal ceph.conf...

Restarting the monitor...

Setting mon public_network...

Creating mgr...

Verifying port 9283 ...

Wrote keyring to /etc/ceph/ceph.client.admin.keyring

Wrote config to /etc/ceph/ceph.conf

Waiting for mgr to start...

Waiting for mgr...

mgr not available, waiting (1/10)...

mgr not available, waiting (2/10)...

mgr not available, waiting (3/10)...

mgr not available, waiting (4/10)...

mgr is available

Enabling cephadm module...

Waiting for the mgr to restart...

Waiting for Mgr epoch 4...

Mgr epoch 4 is available

Setting orchestrator backend to cephadm...

Generating ssh key...

Wrote public SSH key to to /etc/ceph/ceph.pub

Adding key to root@localhost's authorized_keys...

Adding host ceph-admin...

Deploying mon service with default placement...

Deploying mgr service with default placement...

Deploying crash service with default placement...

Enabling mgr prometheus module...

Deploying prometheus service with default placement...

Deploying grafana service with default placement...

Deploying node-exporter service with default placement...

Deploying alertmanager service with default placement...

Enabling the dashboard module...

Waiting for the mgr to restart...

Waiting for Mgr epoch 12...

Mgr epoch 12 is available

Generating a dashboard self-signed certificate...

Creating initial admin user...

Fetching dashboard port number...

Ceph Dashboard is now available at:

URL: https://ceph-admin:8443/

User: admin

Password: 3r2yy3pja4

You can access the Ceph CLI with:

sudo /usr/sbin/cephadm shell --fsid d33d7a0c-9a5a-11ef-a4d0-000c2910f6bd -c /etc/ceph/ceph.conf -k /etc/ceph/ceph.client.admin.keyring

Please consider enabling telemetry to help improve Ceph:

ceph telemetry on

For more information see:

https://docs.ceph.com/docs/master/mgr/telemetry/

Bootstrap complete.

拉取下来的镜像

[root@ceph-admin ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

quay.io/ceph/ceph v15 93146564743f 2 years ago 1.2GB

quay.io/ceph/ceph-grafana 6.7.4 557c83e11646 3 years ago 486MB

quay.io/prometheus/prometheus v2.18.1 de242295e225 4 years ago 140MB

quay.io/prometheus/alertmanager v0.20.0 0881eb8f169f 4 years ago 52.1MB

quay.io/prometheus/node-exporter v0.18.1 e5a616e4b9cf 5 years ago 22.9MB

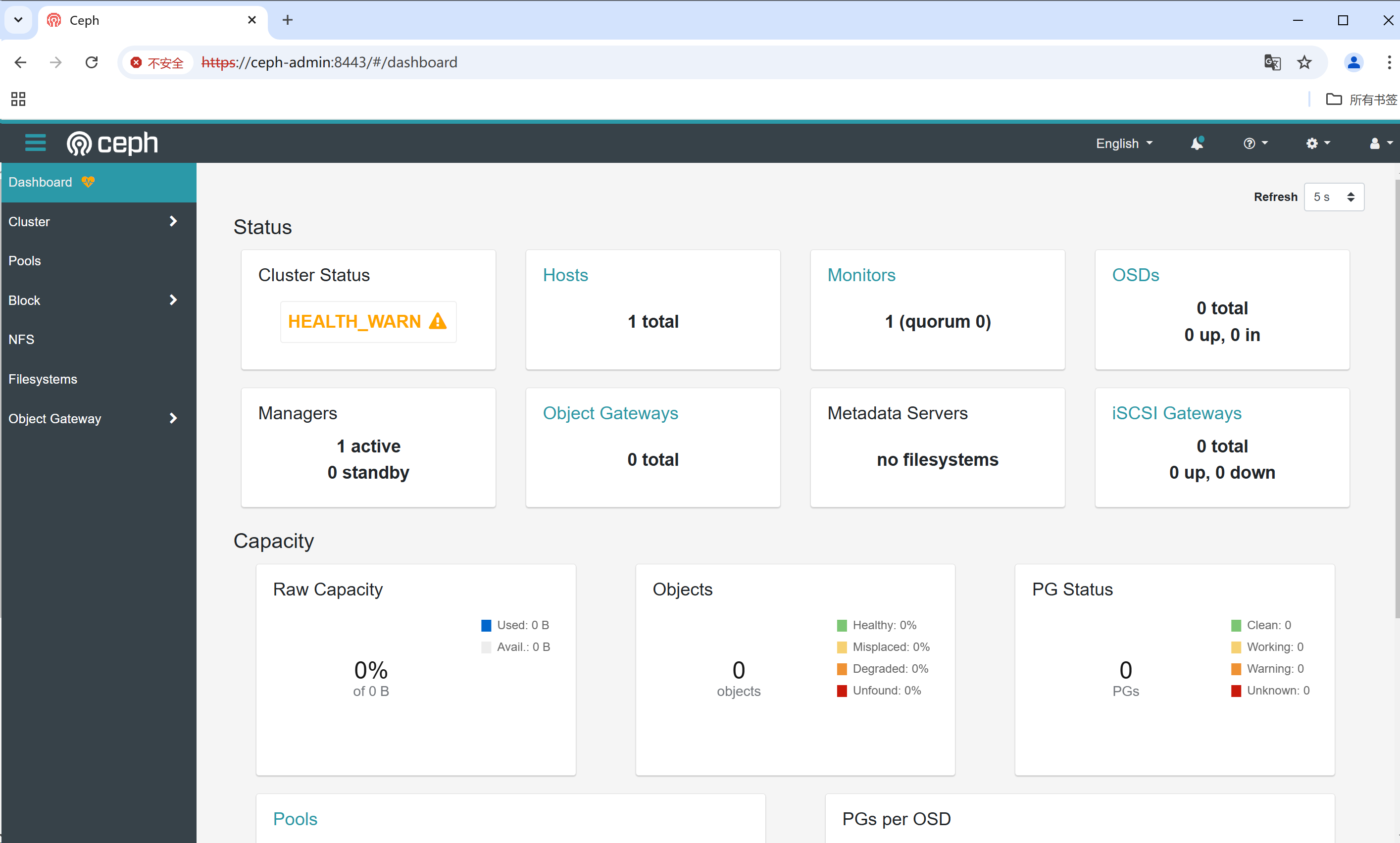

通过URL可以访问到可视化界面,输入用户名和密码即可进入界面

其他主机加入集群:

[root@ceph-admin ~]# cephadm shell

Inferring fsid d33d7a0c-9a5a-11ef-a4d0-000c2910f6bd

Inferring config /var/lib/ceph/d33d7a0c-9a5a-11ef-a4d0-000c2910f6bd/mon.ceph-admin/config

Using recent ceph image quay.io/ceph/ceph@sha256:c08064dde4bba4e72a1f55d90ca32df9ef5aafab82efe2e0a0722444a5aaacca

[ceph: root@ceph-admin /]#ceph cephadm get-pub-key >/etc/ceph/ceph.pub

[ceph: root@ceph-admin /]#ssh-copy-id -f -i /etc/ceph/ceph.pub root@ceph-node01

[ceph: root@ceph-admin /]#ssh-copy-id -f -i /etc/ceph/ceph.pub root@ceph-node02

[ceph: root@ceph-admin /]# ceph orch host add ceph-node01

Added host 'ceph-node01'

[ceph: root@ceph-admin /]# ceph orch host add ceph-node02

Added host 'ceph-node02'

[ceph: root@ceph-admin /]#

等待一段时间(可能需要等待的时间比较久,新加入的主机需要拉取需要的镜像和启动容器实例),查看集群状态:

[ceph: root@ceph-admin /]# ceph -s

cluster:

id: d33d7a0c-9a5a-11ef-a4d0-000c2910f6bd

health: HEALTH_WARN

OSD count 0 < osd_pool_default_size 3

services:

mon: 3 daemons, quorum ceph-admin,ceph-node01,ceph-node02 (age 8m)

mgr: ceph-admin.wllglw(active, since 36m), standbys: ceph-node01.kqedae

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

6.4部署OSD

这边我每台节点都添加60G大小的硬盘,将集群中所有的空闲磁盘分区全部部署到集群中:

[ceph: root@ceph-admin /]# ceph orch daemon add osd ceph-admin:/dev/sdb

Created osd(s) 0 on host 'ceph-admin'

[ceph: root@ceph-admin /]# ceph orch daemon add osd ceph-node01:/dev/sdb

Created osd(s) 1 on host 'ceph-node01'

[ceph: root@ceph-admin /]# ceph orch daemon add osd ceph-node02:/dev/sdb

Created osd(s) 2 on host 'ceph-node02'

[ceph: root@ceph-admin /]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

|-sda1 8:1 0 2M 0 part

|-sda2 8:2 0 512M 0 part /rootfs/boot

`-sda3 8:3 0 19.5G 0 part

|-rl-root 253:0 0 14.5G 0 lvm /rootfs

`-rl-home 253:1 0 5G 0 lvm /rootfs/home

sdb 8:16 0 60G 0 disk

`-ceph--638af38f--b7ec--4a88--a7f0--c7826ac3091f-osd--block--bda1263f--2e07--424b--9630--926a5ab0defa 253:2 0 60G 0 lvm

sr0 11:0 1 1024M 0 rom

再次查看集群状态

[ceph: root@ceph-admin /]# ceph -s

cluster:

id: d33d7a0c-9a5a-11ef-a4d0-000c2910f6bd

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-admin,ceph-node01,ceph-node02 (age 12m)

mgr: ceph-admin.wllglw(active, since 41m), standbys: ceph-node01.kqedae

osd: 3 osds: 3 up (since 59s), 3 in (since 59s)

task status:

data:

pools: 1 pools, 1 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 177 GiB / 180 GiB avail

pgs: 1 active+clean

可视化界面验证查看集群

创建mon和mgr

ceph集群一般默认会允许存在5个mon和2个mgr;可以使用ceph orch apply mon --placement="3 node1 node2 node3"进行手动修改

[root@ceph-admin ~]# ceph orch apply mon --placement="3 ceph-admin ceph-node01 ceph-node02"

Scheduled mon update...

[root@ceph-admin ~]# ceph orch apply mgr --placement="3 ceph-admin ceph-node01 ceph-node02"

Scheduled mgr update...

[root@ceph-admin ~]# ceph orch ls

NAME RUNNING REFRESHED AGE PLACEMENT IMAGE NAME IMAGE ID

alertmanager 1/1 1s ago 97m count:1 quay.io/prometheus/alertmanager:v0.20.0 0881eb8f169f

crash 3/3 6m ago 97m * quay.io/ceph/ceph:v15 93146564743f

grafana 1/1 1s ago 97m count:1 quay.io/ceph/ceph-grafana:6.7.4 557c83e11646

mgr 3/3 6m ago 9s ceph-admin;ceph-node01;ceph-node02;count:3 quay.io/ceph/ceph:v15 93146564743f

mon 3/3 6m ago 2m ceph-admin;ceph-node01;ceph-node02;count:3 quay.io/ceph/ceph:v15 93146564743f

node-exporter 3/3 6m ago 97m * quay.io/prometheus/node-exporter:v0.18.1 e5a616e4b9cf

osd.None 3/0 6m ago - <unmanaged> quay.io/ceph/ceph:v15 93146564743f

prometheus 1/1 1s ago 97m count:1 quay.io/prometheus/prometheus:v2.18.1 de242295e225

创建mds

[root@ceph-admin ~]# ceph osd pool create cephfs_data

pool 'cephfs_data' created

[root@ceph-admin ~]# ceph osd pool create cephfs_metadata

pool 'cephfs_metadata' created

[root@ceph-admin ~]# ceph fs new cephfs cephfs_metadata cephfs_data

new fs with metadata pool 3 and data pool 2

[root@ceph-admin ~]# ceph orch apply mds cephfs --placement="3 ceph-admin ceph-node01 ceph-node02"

Scheduled mds.cephfs update...

[root@ceph-admin ~]# ceph orch ps --daemon-type mds

NAME HOST STATUS REFRESHED AGE VERSION IMAGE NAME IMAGE ID CONTAINER ID

mds.cephfs.ceph-admin.gachjc ceph-admin running (16s) 13s ago 16s 15.2.17 quay.io/ceph/ceph:v15 93146564743f f11fe6823047

mds.cephfs.ceph-node01.hslbck ceph-node01 running (17s) 14s ago 17s 15.2.17 quay.io/ceph/ceph:v15 93146564743f 3ee432d4df1f

mds.cephfs.ceph-node02.fvozbb ceph-node02 running (15s) 14s ago 15s 15.2.17 quay.io/ceph/ceph:v15 93146564743f b6c651827aeb

创建RBD

[root@ceph-admin ~]# ceph osd pool create rbd 128

pool 'rbd' created

[root@ceph-admin ~]# ceph -s

cluster:

id: d33d7a0c-9a5a-11ef-a4d0-000c2910f6bd

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-admin,ceph-node01,ceph-node02 (age 33m)

mgr: ceph-admin.wllglw(active, since 4h), standbys: ceph-node01.kqedae, ceph-node02.khyqgz

mds: cephfs:1 {0=cephfs.ceph-node01.hslbck=up:active} 2 up:standby

osd: 3 osds: 3 up (since 4h), 3 in (since 4h)

data:

pools: 4 pools, 193 pgs

objects: 22 objects, 2.2 KiB

usage: 3.0 GiB used, 177 GiB / 180 GiB avail

pgs: 193 active+clean

[root@ceph-admin ~]# ceph osd pool ls

device_health_metrics

cephfs_data

cephfs_metadata

rbd

[root@ceph-admin ~]# ceph osd pool application enable rbd rbd

enabled application 'rbd' on pool 'rbd'

[root@ceph-admin ~]# rbd create rdbtest --size 3G --pool rbd --image-format 2 --image-feature layering

[root@ceph-admin ~]# rbd ls --pool rbd -l --format json --pretty-format

[

{

"image": "rdbtest",

"id": "389d220bb28a",

"size": 3221225472,

"format": 2

}

]

[root@ceph-admin ~]# rbd --image rdbtest -p rbd info

rbd image 'rdbtest':

size 3 GiB in 768 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 389d220bb28a

block_name_prefix: rbd_data.389d220bb28a

format: 2

features: layering

op_features:

flags:

create_timestamp: Mon Nov 4 16:16:19 2024

access_timestamp: Mon Nov 4 16:16:19 2024

modify_timestamp: Mon Nov 4 16:16:19 2024

创建RBD用户

[ceph: root@ceph-admin ceph]# ceph auth add client.cjt mon 'allow r' osd 'allow rw pool=rbd'

added key for client.cjt

[ceph: root@ceph-admin ceph]# ceph auth get client.cjt

exported keyring for client.cjt

[client.cjt]

key = AQCAeylnpNpgDxAA1N3+kBao9qIZ0XDbUza8QA==

caps mon = "allow r"

caps osd = "allow rw pool=rbd"

[ceph: root@ceph-admin ceph]# ceph auth get client.cjt -o ceph.client.cjt.keyring

exported keyring for client.cjt

[ceph: root@ceph-admin ceph]# ls

ceph.client.cjt.keyring ceph.conf ceph.keyring rbdmap

[ceph: root@ceph-admin ceph]# ls

ceph.client.cjt.keyring ceph.conf ceph.keyring rbdmap

[ceph: root@ceph-admin ceph]# scp ceph.client.cjt.keyring root@192.168.50.130:/etc/ceph

The authenticity of host '192.168.50.130 (192.168.50.130)' can't be established.

ECDSA key fingerprint is SHA256:3+rTm86R7U6DEPbNx3aGZkUfY8WxgSbSGjr3aBONCcg.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '192.168.50.130' (ECDSA) to the list of known hosts.

root@192.168.50.130's password:

ceph.client.cjt.keyring 100% 115 146.8KB/s 00:00

分发凭据给client端

root@cephadm-deploy:/ceph# scp ceph.client.wgsrbd.keyring root@ceph-client:/etc/ceph客户端访问集群配置(版本最好一致都是version15.2.17)

ceph源

[root@ceph-admin ~]# cat /etc/yum.repos.d/ceph.repo

[ceph]

name=Ceph packages for $basearch

baseurl=https://mirrors.tuna.tsinghua.edu.cn/ceph/rpm-15.2.17/el8/x86_64

enabled=1

gpgcheck=0

[ceph-noarch]

name=Ceph noarch packages

baseurl=https://mirrors.tuna.tsinghua.edu.cn/ceph/rpm-15.2.17/el8/noarch/

enabled=1

gpgcheck=0

[ceph-source]

name=Ceph source packages

baseurl=https://mirrors.tuna.tsinghua.edu.cn/ceph/rpm-15.2.17/el8/SRPMS

enabled=1

gpgcheck=0

epel源安装

yum install epel-release -yyum install -y ceph-common客户端使用普通用户测试访问集群

#试用普通用户访问ceph集群

[root@controller ceph]# ceph --user cjt -s

cluster:

id: d33d7a0c-9a5a-11ef-a4d0-000c2910f6bd

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-admin,ceph-node01,ceph-node02 (age 57m)

mgr: ceph-admin.wllglw(active, since 57m), standbys: ceph-node02.khyqgz, ceph-node01.kqedae

mds: cephfs:1 {0=cephfs.ceph-admin.gachjc=up:active} 2 up:standby

osd: 3 osds: 3 up (since 57m), 3 in (since 22h)

data:

pools: 4 pools, 97 pgs

objects: 26 objects, 3.3 KiB

usage: 3.2 GiB used, 177 GiB / 180 GiB avail

pgs: 97 active+clean

普通用户挂载rbd

[ceph: root@ceph-admin ceph]# ceph auth caps client.cjt mon 'allow r' osd 'allow rwx pool=rbd'

updated caps for client.cjt

[root@controller ceph]# sudo rbd map rbd/rdbtest --user cjt

/dev/rbd0

[root@controller ceph]# lsblk /dev/rbd0

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

rbd0 252:0 0 3G 0 disk

[root@controller ceph]# mkfs.xfs /dev/rbd0

Discarding blocks...Done.

meta-data=/dev/rbd0 isize=512 agcount=8, agsize=98304 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=786432, imaxpct=25

= sunit=1024 swidth=1024 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@controller ceph]# mount /dev/rbd0 /mnt/

[root@controller ceph]# touch /mnt/test

[root@controller ceph]# ls /mnt/

test

[root@controller ceph]# umount /mnt

[root@controller ceph]# sudo rbd unmap rbd/rdbtest --user cjt

[root@controller ceph]# ls /mnt/

[root@controller ceph]#

其他操作如更改镜像大小、创建快照、克隆等功能,均与admin用户操作相同,只不过每条命令后边会添加--user [用户名]的操作,例如

[root@controller ceph]# rbd resize rbd/rdbtest --size 15G --user cjt

Resizing image: 100% complete...done.

[root@controller ceph]# rbd --image rdbtest -p rbd info --user cjt

rbd image 'rdbtest':

size 15 GiB in 3840 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 389d220bb28a

block_name_prefix: rbd_data.389d220bb28a

format: 2

features: layering

op_features:

flags:

create_timestamp: Mon Nov 4 16:16:19 2024

access_timestamp: Mon Nov 4 16:16:19 2024

modify_timestamp: Mon Nov 4 16:16:19 2024